Chinese researchers have established CAS(ME)3, a large-scale, spontaneous micro-expression database with depth information and high ecological validity. The database is expected to drive the next generation of micro-expression analysis.

CAS(ME)3 offers around 80 hours of video with over 8,000,000 frames, including 1,109 manually labeled micro-expressions and 3,490 macro-expressions, according to Dr. WANG Sujing, leader of the research team and a researcher at the Institute of Psychology of the Chinese Academy of Sciences.

As significant non-verbal communication cues, micro-expressions can reveal a person's genuine emotional state. Micro-expression analysis has attracted growing attention, especially as deep learning methods have flourished in the field. However, research in both psychology and artificial intelligence has been limited by the small number of available micro-expression samples.

"Data size is vital for using artificial intelligence to conduct micro-expression analysis, and the large sample size of CAS(ME)3 allows micro-expression analysis methods to be validated effectively while avoiding database bias," said Dr. WANG.

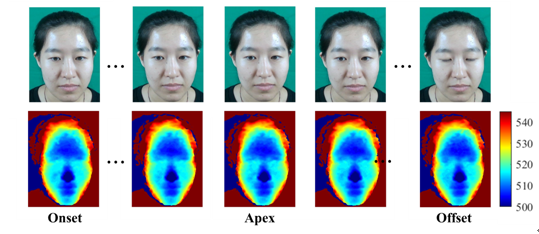

One of the highlights of CAS(ME)3 is that the samples in the database contain depth information. The introduction of such information was inspired by a psychological experiment on human visual perception of micro-expressions. Subjects were asked to watch 2D and 3D micro-expression videos and answer three questions about the video’s emotional valence, emotional type, and emotional intensity. Shorter reaction time and a higher intensity rating for 3D videos indicated that depth information could facilitate emotional recognition.

After demonstrating the effectiveness of depth information on human visual perception, the researchers continued work on intelligent micro-expression analysis. "The algorithms could be more sensitive to the continuous changes in micro-expression with the help of the depth information," said Dr. LI Jingting, first author of the study and a researcher at the Institute of Psychology.

The samples of RGB image and depth information (Subject spNO.216 in Part A: Surprise with AU R1+R2). (Image by Dr. LI Jingting)

Another highlight of CAS(ME)3 is its high ecological validity. During the elicitation process, the researchers made the acquisition environment as close to reality as possible, with participants engaging in a mock crime and interrogation. During this process, video, voice, and physiological signals (including electrodermal activity, heart rate/fingertip pulse, respiration, and pulse) were collected using video recorders and wearable devices.

The experiment showed that subjects in high-stakes scenarios (e.g., committing a crime) leaked more micro-expressions than those in low-stakes scenarios (e.g., being innocent). Samples with such high ecological validity thus provide a foundation for robust real-world micro-expression analysis and emotional understanding.

Unsupervised learning is another important means of alleviating the problem of small micro-expression sample sizes. Self-supervised learning, a form of unsupervised learning, has become a popular research topic. Depth information or additional micro-expression-related frames can naturally be used to generate labels and construct self-supervised learning models for micro-expression analysis. CAS(ME)3 provides 1,508 unlabeled videos with more than 4,000,000 frames, making it a data platform for unsupervised micro-expression analysis methods.

Researchers from the Institute of Psychology led by Professor FU Xiaolan previously released three other micro-expression databases, i.e., CASME, CASME II, and CAS(ME)2. More than 600 research teams from over 50 countries have requested these databases, and more than 80% of all micro-expression analysis articles have used at least one of them for method validation.

With CAS(ME)3 and the previous databases, future work can be expected on self-supervised micro-expression analysis using unlabeled data, as well as on multimodal research incorporating physiological and voice signals.

This study was published on May 13 in IEEE Transactions on Pattern Analysis and Machine Intelligence and was funded by the National Natural Science Foundation of China, the China Postdoctoral Science Foundation and the National Key Research and Development Project of China.
