A high-accuracy method for pose estimation based on rotation parameters has been proposed by scientists from the Institute of Optics and Electronics (IOE) of the Chinese Academy of Sciences (CAS).

Vision-based estimation of target pose (position and attitude) is a key research frontier in optoelectronic precision measurement, and plays an important role in space operations, industrial manufacturing and robot navigation. In space applications especially, the accuracy of target pose estimation directly affects the success of the mission.

At present, pose estimation for cooperative targets is very mature and has been widely used in industry, medicine and space. However, most targets lack the prior information available for cooperative targets, which makes estimating their pose a major challenge.

Recently, with the support of the National Natural Science Foundation and the Youth Innovation Promotion Association of CAS, Dr. ZHAO Rujin's research team proposed a high-accuracy method for pose estimation based on rotation parameters.

The researchers first parameterized the rotation matrix using Cayley-Gibbs-Rodrigues (CGR) parameters, which transforms the pose estimation problem into an optimization problem over the rotation parameters.
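As an illustration of the CGR parameterization only, the sketch below maps a three-element CGR vector s to a rotation matrix with the standard Cayley formula R = ((1 - sᵀs) I + 2[s]ₓ + 2ssᵀ) / (1 + sᵀs), where s is the rotation axis scaled by tan(θ/2). The function names `cgr_to_rotation` and `axis_angle_to_cgr` are hypothetical and are not taken from the paper; this is not the team's implementation.

```python
import math


def axis_angle_to_cgr(axis, angle):
    """Hypothetical helper: CGR vector s = tan(angle / 2) * unit axis."""
    norm = math.sqrt(sum(a * a for a in axis))
    t = math.tan(angle / 2.0)
    return [t * a / norm for a in axis]


def cgr_to_rotation(s):
    """Hypothetical helper: map a CGR vector to a 3x3 rotation matrix.

    Uses R = ((1 - s.s) I + 2 [s]_x + 2 s s^T) / (1 + s.s).
    """
    s1, s2, s3 = s
    n2 = s1 * s1 + s2 * s2 + s3 * s3
    scale = 1.0 / (1.0 + n2)
    # Skew-symmetric cross-product matrix [s]_x
    skew = [
        [0.0, -s3, s2],
        [s3, 0.0, -s1],
        [-s2, s1, 0.0],
    ]
    R = [[0.0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            identity = 1.0 if i == j else 0.0
            R[i][j] = scale * (
                (1.0 - n2) * identity + 2.0 * skew[i][j] + 2.0 * s[i] * s[j]
            )
    return R


# Example: a 90-degree rotation about the z-axis.
R_z90 = cgr_to_rotation(axis_angle_to_cgr([0.0, 0.0, 1.0], math.pi / 2.0))
```

Every entry of R is a rational function of s with the common denominator 1 + sᵀs, so a cost function built from R becomes polynomial in s once that denominator is cleared. This is what makes algebraic solvers applicable. One known limitation of this parameterization is that a rotation of exactly 180 degrees corresponds to an infinite s.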

Then, using the Gröbner basis method, they obtained the pose by optimizing the rotation parameters, which enables pose estimation for varying numbers of points in different configurations.

Compared with traditional methods, the new method is more versatile and achieves a higher level of accuracy.

The results are expected to be applied to pose estimation of arbitrary targets in future space missions, and to extend to industrial manufacturing, medical assistance and robot navigation.

The research was published in Measurement under the title "A high accuracy method for pose estimation based on rotation parameters".

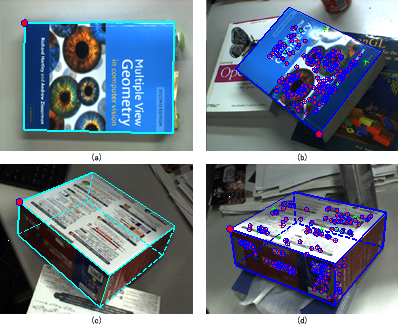

Experiment results using real images. (Image by ZHAO Rujin)
